Today’s computer viruses can copy themselves between computers, but they can’t mutate. That might change very soon, leading to a serious crisis.

Research on program synthesis is proceeding quickly, using neural reinforcement learning. With a similar approach, researchers in 2017 showed that a computer can teach itself to master chess and Go through self-play, starting from nothing but the rules.

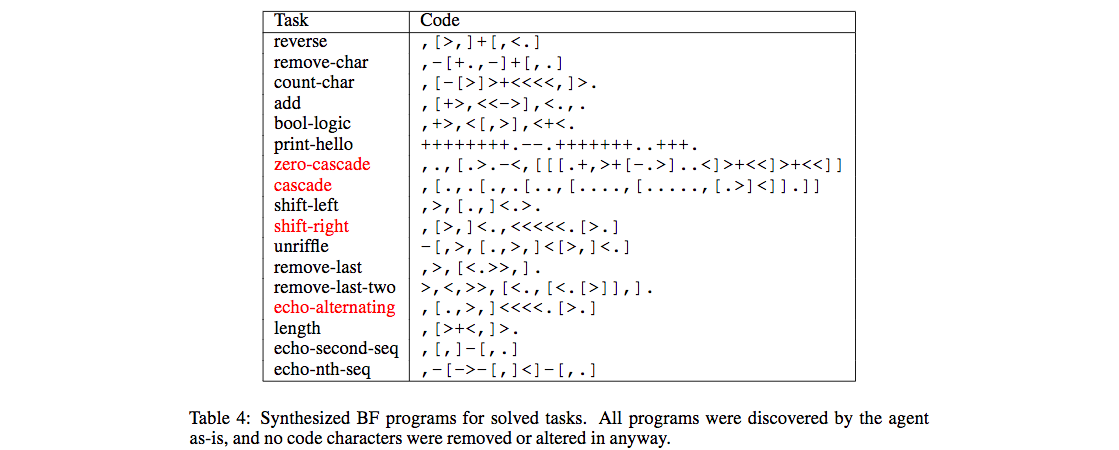

The programs currently being generated are very small: dividing two numbers, reversing a string of letters, etc. It could be that there’s another chasm to cross before programs for more interesting tasks can be generated. But what if there’s not?

Consider the problem of automatically generating a program that can install itself on another computer on the network. To make this work, the statistical process generating the program needs some way to know which partial programs are most promising. Metaphorically, the “AI” needs some way to “know” when it’s “on the right track”.

Imagine you were the one generating this program, and you knew nothing except that it should be a sequence of characters. Even knowing nothing, if you could get feedback on each character, you’d quickly be able to get the result through sheer trial and error. You would just have to try each character one-by-one, keeping the character that performed best. If you only got feedback on each character pair, your life would be harder, but you’d still get there eventually. If feedback only came after each complete line, you probably wouldn’t get to the answer in a human lifetime.
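To make the thought experiment concrete, here is a toy sketch in Python. Everything in it is an assumption made for illustration: the target is a harmless fixed string (`SECRET`), the alphabet is 27 symbols, the searcher is assumed to know the target's length, and the "feedback" is an oracle (`matching_prefix`) that reports how many leading characters are right. The helper `worst_case_guesses` is a rough upper bound on how the cost grows when feedback only arrives per block of characters.

```python
import string

ALPHABET = string.ascii_lowercase + " "   # 27 symbols, an arbitrary choice
SECRET = "hello world"                    # hypothetical, harmless stand-in target


def matching_prefix(candidate: str) -> int:
    """Feedback oracle: how many leading characters of candidate are correct."""
    count = 0
    for got, want in zip(candidate, SECRET):
        if got != want:
            break
        count += 1
    return count


def search_with_char_feedback():
    """Build the string left to right, keeping whichever character scores best."""
    found, queries = "", 0
    while len(found) < len(SECRET):       # assumes the length is known
        best_char, best_score = None, -1
        for ch in ALPHABET:
            queries += 1
            score = matching_prefix(found + ch)
            if score > best_score:
                best_char, best_score = ch, score
        found += best_char
    return found, queries


def worst_case_guesses(length: int, alphabet_size: int, block: int) -> int:
    """Upper bound on guesses if feedback only arrives once per block."""
    blocks = -(-length // block)          # ceiling division
    return blocks * alphabet_size ** block


if __name__ == "__main__":
    print(search_with_char_feedback())    # recovers the string in 11 * 27 queries
    for block in (1, 2, 80):              # per character, per pair, per 80-char line
        print(block, worst_case_guesses(80, 95, block))
```

For an 80-character line over 95 printable characters, the bound is 7,600 guesses with per-character feedback and 361,000 with per-pair feedback. With feedback only on the complete line it is 95 to the power of 80, which no amount of trial and error will get through.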

The simple point here is that the task of generating malware automatically will be difficult if the “reward” is all-or-nothing, that is, if we only know we’re on the right track once the program is fully successful. If partial success can be rewarded, it will be much easier to find the correct solution.
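The same contrast shows up in a toy search that mutates one character at a time. The sketch below is not a model of program synthesis, just an illustration of the reward problem on a harmless string. The names `graded_reward`, `sparse_reward`, and `hill_climb`, the target string, the step budget, and the seed are all made up for this example.

```python
import random

ALPHABET = "abcdefghijklmnopqrstuvwxyz "
TARGET = "hello world"                    # hypothetical, harmless stand-in target


def graded_reward(candidate: str) -> int:
    """Partial credit: one point for every position that is already correct."""
    return sum(a == b for a, b in zip(candidate, TARGET))


def sparse_reward(candidate: str) -> int:
    """All-or-nothing: full marks for an exact match, zero otherwise."""
    return len(TARGET) if candidate == TARGET else 0


def hill_climb(reward, budget=20_000, seed=0):
    """Mutate one random character per step; keep the change if reward does not drop.

    Returns the number of steps used, or None if the budget ran out.
    """
    rng = random.Random(seed)
    current = [rng.choice(ALPHABET) for _ in TARGET]
    score = reward("".join(current))
    for step in range(budget):
        if score == len(TARGET):
            return step
        trial = list(current)
        trial[rng.randrange(len(trial))] = rng.choice(ALPHABET)
        trial_score = reward("".join(trial))
        if trial_score >= score:
            current, score = trial, trial_score
    return budget if score == len(TARGET) else None


if __name__ == "__main__":
    print("graded reward:", hill_climb(graded_reward))
    print("sparse reward:", hill_climb(sparse_reward))
```

With partial credit, the search typically finishes in around a thousand steps. With the all-or-nothing reward, every wrong string looks equally bad, so the search is a blind random walk and the budget runs out long before it stumbles on the answer.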

It might be that there are lots of obvious, intermediate rewards that make generating malware very easy. Exiting without error, connecting to the target IP, and sustaining long and varied communication all seem like good signs that the program is on the right track.

If it turns out that writing a virus is much easier than mastering chess, we could be in a lot of trouble. Every time the virus infects another computer, the new host can be put to work improving and spreading the malware. The improvements apply to the process of generating the malware, rather than any single exploit. Once the attack gains momentum, it could be finding and exploiting new vulnerabilities faster than they can be patched. Vulnerabilities could be uncovered and exploited at any level, including low-level firmware.

People love getting into philosophical debates about the plausibility of “artificial general intelligence”. This is as fine a hobby as any, but we shouldn’t lose sight of the fact that mutating self-replicating systems don’t need anything like “general intelligence” to pose a huge threat.

For the first big AI safety crisis, think robot flu, not robot uprising.